Today, GitHub sent out security notices to owners of projects using old jackson-databind versions (older than 2.8.11.1 and 2.9.5). These notices pertain to this issue. I have talked about its relevance before on IRC, but since it is getting more attention now, I will describe it here again.

The Problem

The “bug” comes from using the so-called default typing. This feature allows a user to deserialize subclasses (or even Object) without specifying the full possible type hierarchy. Consider this model:

interface I {
}

@Data
class A implements I {
    private int i;
}

@Data
class B implements I {
    private boolean b;
}

Now, if we wish to serialize the interface I, we usually need to specify some sort of type info. This is typically done through an annotation on the interface:

@JsonTypeInfo(use = JsonTypeInfo.Id.NAME)
@JsonSubTypes({
    @JsonSubTypes.Type(value = A.class, name = "A"),
    @JsonSubTypes.Type(value = B.class, name = "B")
})
interface I {
}

If we now serialize an object of type I, we get a result like this:

new ObjectMapper().writerFor(I.class).writeValueAsString(new A())

{"@type":"A","i":0}

Deserializing this JSON works as expected.

Default typing

This annotation-based registration is fine for small use cases, but can get cumbersome if the types are in different modules, or there are just a lot of them, or it would be bad style to reference them from the parent class. Normal Java serialization does not have this problem (it just carries the actual dynamic class name with it), so this could be a barrier for adoption of Jackson for previous Java serialization users.

The problem here is that we want people to migrate from Java serialization. There are lots of reasons, most of them compelling.

Enter default typing. With default typing, we don’t need the type info annotations at all:

new ObjectMapper().enableDefaultTyping().writerFor(I.class).writeValueAsString(new A())

["net.hawo.tv.tvui17.A",{"i":0}]

(Ignore the fact that this is now suddenly an array – this is one of the ways Jackson may include type info.)

The idea is simple: include the full class name in the JSON, and you can simply load the proper class at runtime! Sounds good, right?

The Vulnerability

Well, it turns out this is not that good of an idea. Java serialization does a very similar thing (though it still requires the named class to be serializable, which Jackson doesn’t) and this has led to what feels like a third of all serious security vulnerabilities in Java applications, ever. The problem is that Java classpaths are often massive, and allowing any class on that classpath to be deserialized at will exposes a huge attack surface. If an attacker can get any class on the classpath to execute code when it is deserialized with Jackson, they have achieved remote code execution.

This is exactly what happened with Jackson. Some classes that were common on user classpaths could be deserialized to execute arbitrary code. The fix for this issue is basically a blacklist of a few of these classes that could be exploited. Blacklists are not a solution, though, and since this first list, the list has been amended several times. The maintainers are playing whack-a-mole here, and in my opinion it is a waste of everyone’s time to be adding all exploitable classes to this list.
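The whack-a-mole problem can be sketched in plain Java, without Jackson. Everything in the following block is hypothetical and only illustrates the idea: `Gadget` stands in for an exploitable class on the classpath, `resolveWithDenyList` mimics "load whatever class the input names unless it is on a blacklist", and `resolveWithAllowList` mimics the name-based registration shown earlier. It is not how Jackson is implemented internally.

```java
import java.util.Map;
import java.util.Set;

// Hypothetical sketch; none of these names are part of Jackson.
class TypeResolutionSketch {

    // Stand-in for a "gadget": merely constructing it has a side effect.
    static class Gadget {
        static boolean triggered = false;
        public Gadget() { triggered = true; }
    }

    // A harmless model class the application actually wants to read.
    static class A { }

    // Blacklist of known-bad class names (the entry is a made-up placeholder).
    static final Set<String> DENY_LIST = Set.of("some.known.bad.Clazz");

    // Explicit registry of the only types the application expects.
    static final Map<String, Class<?>> ALLOW_LIST = Map.of("A", A.class);

    // Default-typing style: instantiate any class the input names,
    // unless someone has already added it to the deny-list.
    static Object resolveWithDenyList(String className) {
        if (DENY_LIST.contains(className)) {
            throw new IllegalArgumentException("Blocked class: " + className);
        }
        try {
            return Class.forName(className).getDeclaredConstructor().newInstance();
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException(e);
        }
    }

    // Registration style: only type ids present in the registry are accepted.
    static Object resolveWithAllowList(String typeId) {
        Class<?> type = ALLOW_LIST.get(typeId);
        if (type == null) {
            throw new IllegalArgumentException("Unknown type id: " + typeId);
        }
        try {
            return type.getDeclaredConstructor().newInstance();
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        // The deny-list has never heard of Gadget, so the gadget runs.
        resolveWithDenyList(Gadget.class.getName());
        System.out.println("gadget ran via deny-list: " + Gadget.triggered);

        // The allow-list only knows "A"; anything else is rejected up front.
        System.out.println(resolveWithAllowList("A").getClass().getSimpleName());
    }
}
```

The deny-list has to anticipate every dangerous class that might ever appear on the classpath, while the allow-list only has to enumerate the handful of types the application actually expects.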

The Solution

Our experience with this same issue in the Java serialization world tells us not to deserialize untrusted data. Luckily, Jackson is much more secure than Java serialization – if you don’t use default typing. The only acceptable solution to this issue in the long run is: do not use default typing to deserialize untrusted data. Default typing is rarely necessary or even a good idea.

Unfortunately, online resources saying this are sparse. Default typing is an “easy” solution, and many people simply do not have the security awareness to see the issue with it – they will stumble over Stack Overflow answers such as this one and simply enable default typing to easily serialize Object fields. The Jackson documentation also doesn’t highlight this as much as it should.

Two alternatives to default typing exist in Jackson:

- Normal, annotation-based typing as shown above. This allows you either to use the full name as with default typing, or even specify your own name for greater compatibility (you can later change the class name without affecting the serialized representation). This is the “standard” solution, and the appropriate one for most use cases.

- Should you not know the possible subtypes of a class you wish to deserialize in advance, you can use the rich registerSubtypes API to dynamically add the types you desire. These types could be detected through an existing module system you are already using, or using something like SPI.
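As a rough illustration of the second alternative, here is a minimal sketch that reuses the I/A/B model from above but registers the subtypes on the mapper instead of listing them in `@JsonSubTypes`. It assumes Jackson 2.x (`jackson-databind`) on the classpath; the class name `RegisterSubtypesSketch` and the helpers `newMapper` and `readOrNull` are made up for this example, and public fields replace Lombok's `@Data` to keep it self-contained. In a real application the registered types might come from a module system or SPI rather than being hard-coded.

```java
import java.io.IOException;

import com.fasterxml.jackson.annotation.JsonTypeInfo;
import com.fasterxml.jackson.databind.ObjectMapper;
import com.fasterxml.jackson.databind.jsontype.NamedType;

// Hypothetical sketch; only the Jackson types and methods are real API.
class RegisterSubtypesSketch {

    // Type info is still name-based, but no subtype is referenced here.
    @JsonTypeInfo(use = JsonTypeInfo.Id.NAME)
    interface I { }

    static class A implements I {
        public int i;
    }

    static class B implements I {
        public boolean b;
    }

    // Registers the known subtypes at runtime instead of via @JsonSubTypes.
    static ObjectMapper newMapper() {
        ObjectMapper mapper = new ObjectMapper();
        mapper.registerSubtypes(
                new NamedType(A.class, "A"),
                new NamedType(B.class, "B"));
        return mapper;
    }

    // Returns null when Jackson rejects the input, e.g. for an
    // unregistered type id (Jackson 2.x signals this with an IOException subtype).
    static I readOrNull(ObjectMapper mapper, String json) {
        try {
            return mapper.readValue(json, I.class);
        } catch (IOException e) {
            return null;
        }
    }

    public static void main(String[] args) throws Exception {
        ObjectMapper mapper = newMapper();
        String json = mapper.writerFor(I.class).writeValueAsString(new A());
        I back = readOrNull(mapper, json);
        System.out.println(json + " -> " + back.getClass().getSimpleName());
    }
}
```

Only the names registered on the mapper are accepted as type ids, so a class name smuggled into the input is not resolved to a class at all.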

All in all, I am a bit dissatisfied with the attention this issue has gotten. The issue is a security vulnerability by design, and anyone using default typing should have been aware of it. Luckily, default typing is not on by default. I do not have statistics but I would be surprised if many people used it or knew of its existence in the first place, and so I find the attention GitHub has given this a bit over the top – the biggest thing these notifications will spread is uncertainty about Jackson, so this article was an attempt at clearing up what it’s actually all about.

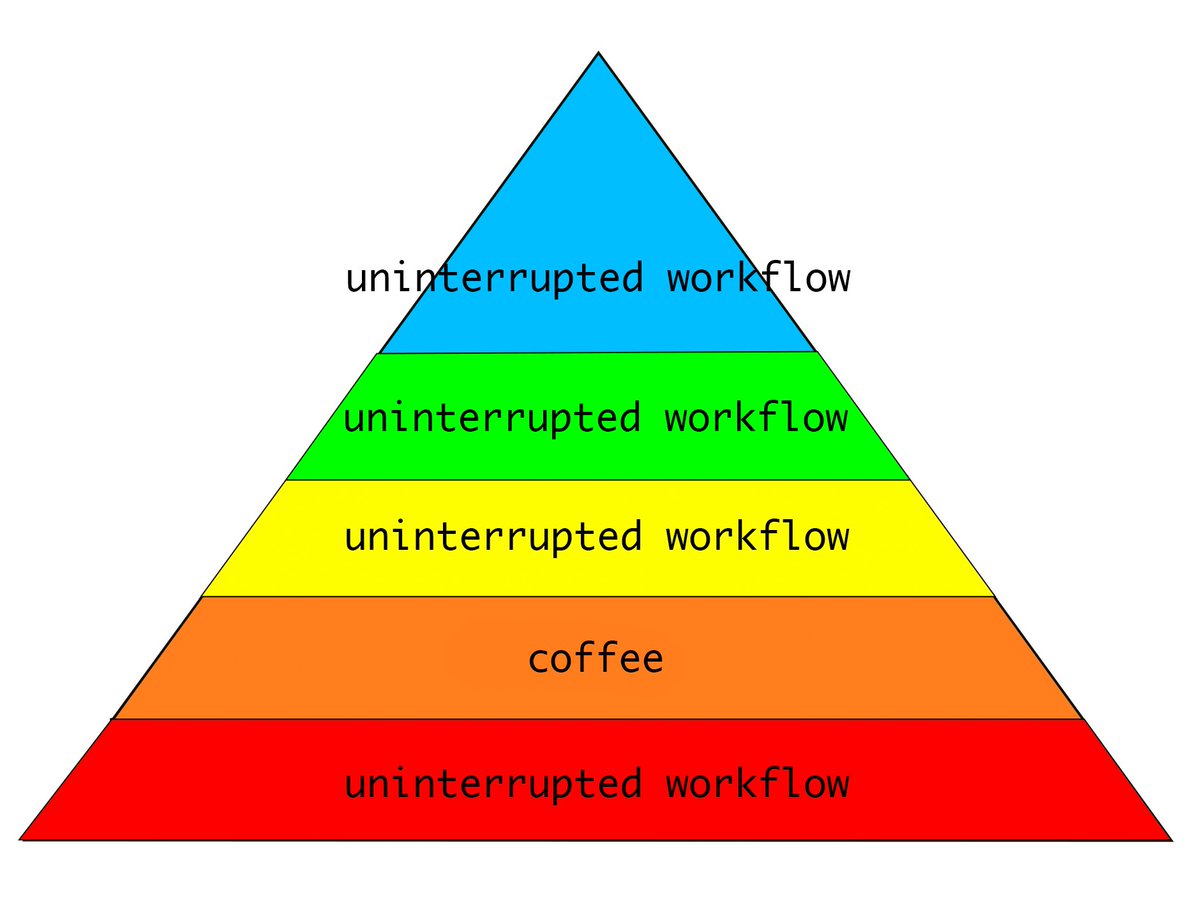

One gets the impression that the developer’s needs weren’t being met when designing that graph.
